PROJECTS

EARTH : Excavation Autonomy with Resilient Traversability and Handling

Excavators, earth-movers, and large construction vehicles have been instrumental in propelling human civilization forward at an unprecedented pace. Recent breakthroughs in computing power, algorithms, and learning architectures have ushered in a new era of autonomy in robotics, now enabling these machines to operate independently. To this end, we introduce EARTH (Excavation Autonomy with Resilient Traversability and Handling), a groundbreaking framework for autonomous excavators and earth-movers. EARTH integrates several novel perception, planning, and hydraulic control components that work synergistically to empower embodied autonomy in these massive machines. This three-year project, funded by MOOG and undertaken in collaboration with the Center for Embodied Autonomy and Robotics (CEAR), represents a significant leap forward in the field of construction robotics.

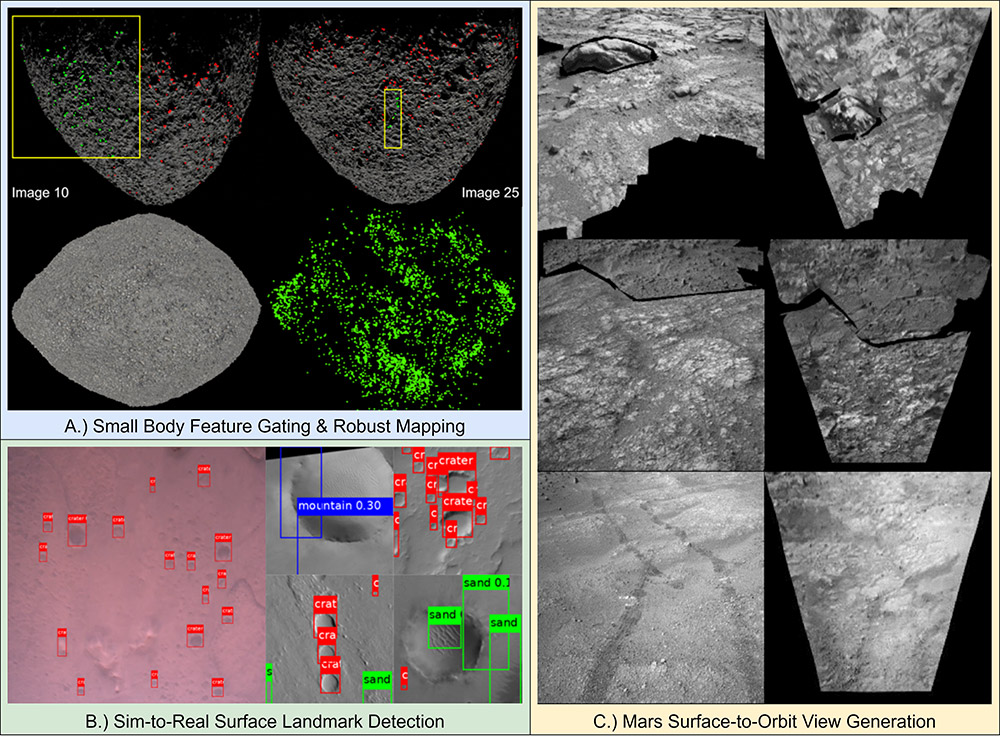

Visual Perception for Spacecraft Navigation

Visual perception is critical to situational awareness and scene understanding for spacecraft performing terrain-relative navigation (TRN) to planet and small body (e.g., asteroid) surfaces. Current techniques for optical TRN are severely limited in functionality and perceptual capability due to processing and memory limitations of the radiation-hardened flight computing infrastructure, requiring extensive a priori knowledge and data that is extremely costly to obtain and develop. With the advent of more powerful and capable flight processors comes the opportunity to deploy advanced computer vision algorithms considered standard for robotic systems here on Earth. However, methods that employ hand-crafted or learning-based features face their own set of challenges when applied to extreme space environments due to various factors, including the unstructured nature of the environment, the absence of labeled training data, and highly dynamic illumination conditions. This research explores techniques to overcome such challenges and enable true autonomy in optical spacecraft TRN.

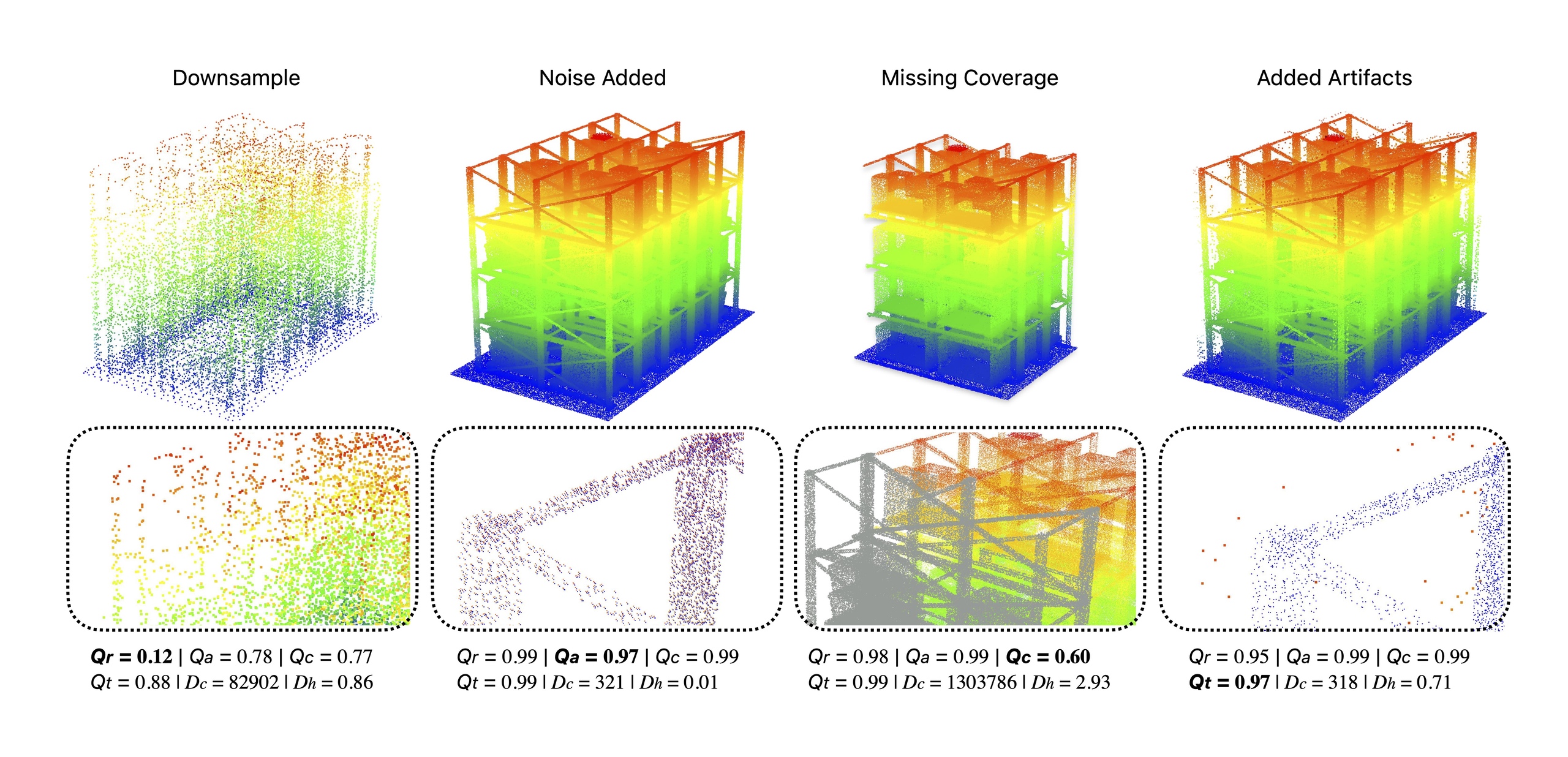

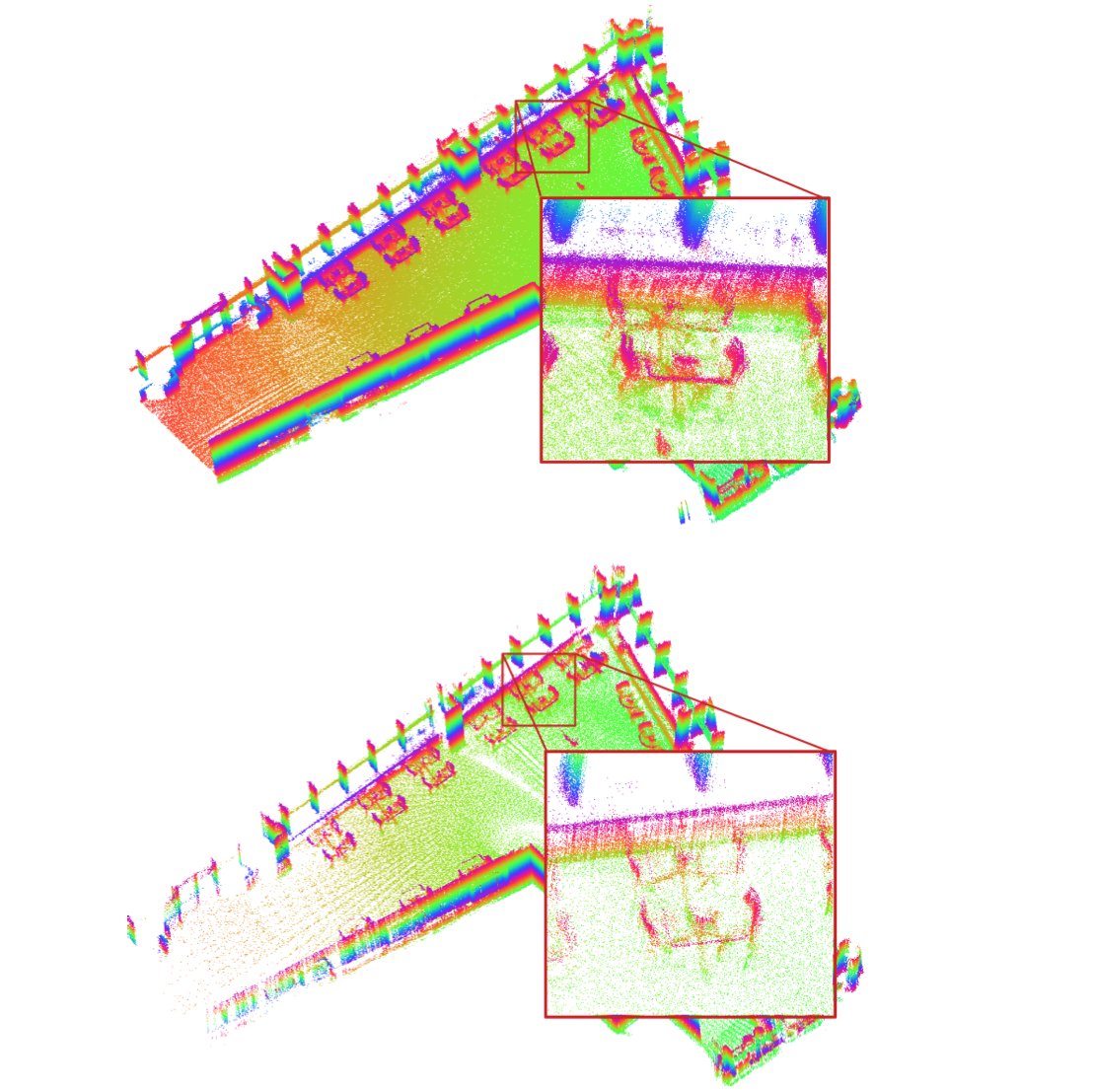

Point Cloud Quality Evaluation Framework

Advancements in sensors, algorithms, and compute hardware have made real-time 3D perception feasible. Current methods for comparing and evaluating the quality of a 3D model, such as Chamfer, Hausdorff, and Earth-mover’s distance, are uni-dimensional and have limitations: they cannot capture coverage or local variations in density and error, and they are significantly affected by outliers. In this paper, we propose an evaluation framework for point clouds (Empir3D) that consists of four metrics: resolution (Qr) to quantify the ability to distinguish between individual parts of the point cloud, accuracy (Qa) to measure registration error, coverage (Qc) to evaluate the portion of missing data, and artifact-score (Qt) to characterize the presence of artifacts. Through detailed analysis, we demonstrate the complementary nature of these dimensions and the improvement they provide over the uni-dimensional measures highlighted above. Further, we demonstrate the utility of Empir3D by comparing our metrics with the uni-dimensional metrics for two 3D perception applications (SLAM and point cloud completion). We believe that Empir3D advances our ability to reason about point clouds and helps debug 3D perception applications by providing a richer evaluation of their performance. Our implementation of Empir3D, custom real-world datasets, evaluation on learning methods, and detailed documentation on how to integrate the pipeline will be made available upon publication.
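To illustrate the outlier sensitivity mentioned above, here is a minimal NumPy sketch of two of the uni-dimensional baselines. It is not part of Empir3D. The function names, the synthetic point clouds, and the specific Chamfer variant (sum of the two mean nearest-neighbor distances, unsquared) are assumptions chosen for illustration; other definitions exist.

```python
import numpy as np

def pairwise_distances(a, b):
    """Euclidean distance between every point in a (N, 3) and b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def chamfer_distance(a, b):
    """One common Chamfer variant: sum of mean nearest-neighbor distances."""
    d = pairwise_distances(a, b)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance: worst-case nearest-neighbor distance."""
    d = pairwise_distances(a, b)
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Synthetic data (assumed for illustration): a reference cloud in the unit
# cube, a slightly noisy copy, and the same copy with one far-away outlier.
rng = np.random.default_rng(0)
reference = rng.uniform(0.0, 1.0, size=(200, 3))
noisy = reference + rng.normal(0.0, 0.01, size=reference.shape)
with_outlier = np.vstack([noisy, [[10.0, 10.0, 10.0]]])

print("Chamfer, noisy copy:      ", chamfer_distance(noisy, reference))
print("Chamfer, with outlier:    ", chamfer_distance(with_outlier, reference))
print("Hausdorff, noisy copy:    ", hausdorff_distance(noisy, reference))
print("Hausdorff, with outlier:  ", hausdorff_distance(with_outlier, reference))
```

A single stray point dominates the Hausdorff distance, and each metric collapses the comparison into one number that says nothing about which regions are missing or where the error is concentrated.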

Project Page : https://droneslab.github.io/E3D/

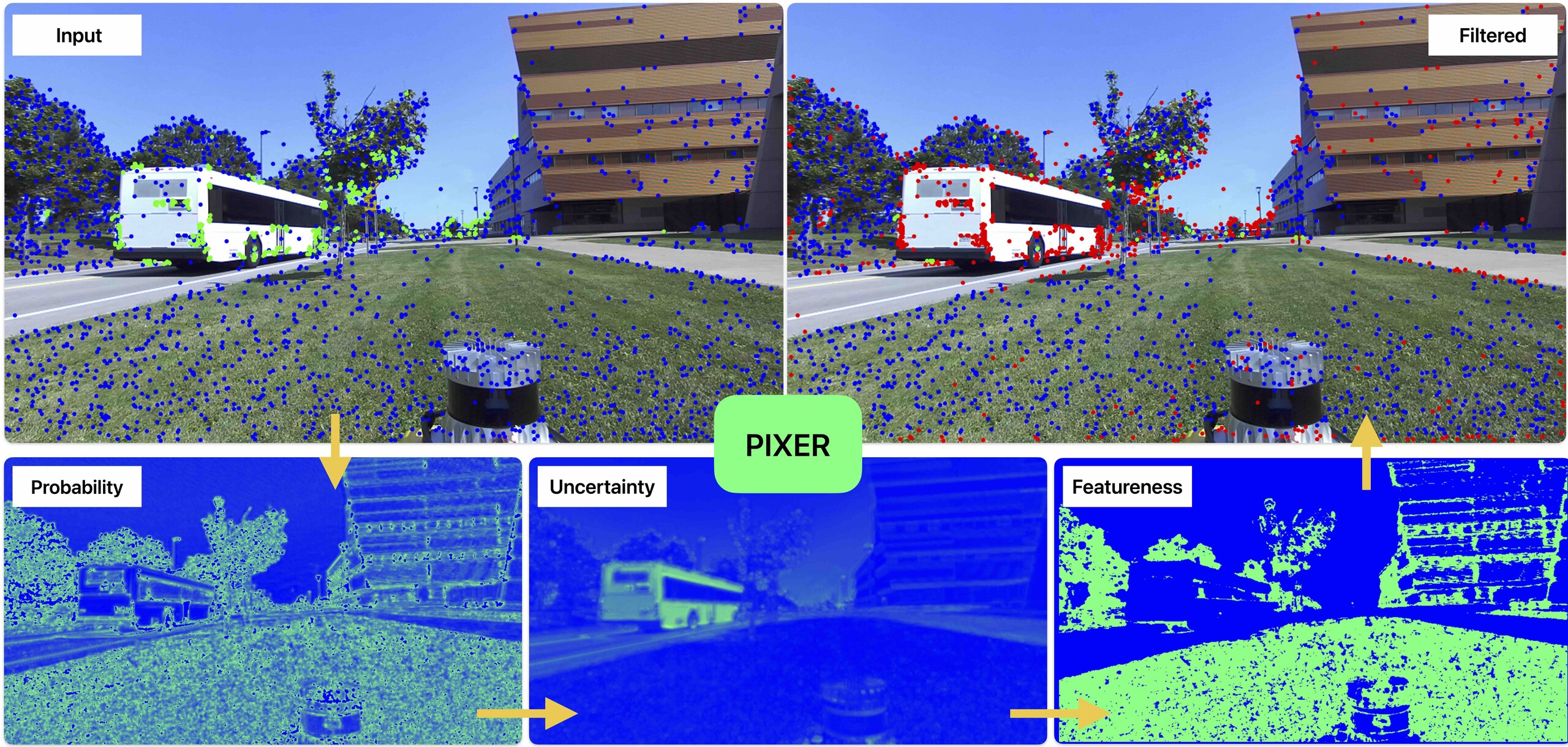

Learning Visual Information Utility with PIXER

Accurate feature detection is fundamental for various computer vision tasks including autonomous robotics, 3D reconstruction, medical imaging, and remote sensing. Despite advancements in enhancing the robustness of visual features, no existing method measures the utility of visual information before processing by specific feature-type algorithms. To address this gap, we introduce PIXER and the concept of “Featureness”, which reflects the inherent interest and reliability of visual information for robust recognition, independent of any specific feature type. Leveraging a generalization of Bayesian learning, our approach quantifies both the probability and uncertainty of a pixel’s contribution to robust visual utility in a single-shot process, avoiding costly operations such as Monte Carlo sampling and permitting customizable featureness definitions adaptable to a wide range of applications. We evaluate PIXER on visual odometry with featureness selectivity, achieving an average 31% improvement in trajectory RMSE with 49% fewer features.
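As a rough illustration of what featureness selectivity could look like downstream, the sketch below filters detected keypoints using per-pixel probability and uncertainty maps. This is not the PIXER implementation: the function name `select_keypoints`, the thresholds `min_prob` and `max_unc`, and the rule of combining the two maps with a simple conjunction are all hypothetical.

```python
import numpy as np

def select_keypoints(keypoints, probability, uncertainty,
                     min_prob=0.5, max_unc=0.2):
    """Keep keypoints that land on pixels judged useful and reliable.

    keypoints:   (N, 2) array of (x, y) pixel coordinates.
    probability: (H, W) map, assumed to hold per-pixel featureness probability.
    uncertainty: (H, W) map, assumed to hold per-pixel uncertainty.
    Thresholds are hypothetical and would be tuned per application.
    """
    cols = keypoints[:, 0].astype(int)  # x indexes columns
    rows = keypoints[:, 1].astype(int)  # y indexes rows
    keep = (probability[rows, cols] >= min_prob) & \
           (uncertainty[rows, cols] <= max_unc)
    return keypoints[keep]

# Toy 4x4 maps (assumed values): only pixel (x=1, y=2) is both probable
# and low-uncertainty; pixel (x=3, y=3) is probable but uncertain.
probability = np.zeros((4, 4))
probability[2, 1] = 0.9
probability[3, 3] = 0.9
uncertainty = np.full((4, 4), 0.1)
uncertainty[3, 3] = 0.5

keypoints = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 3.0]])
selected = select_keypoints(keypoints, probability, uncertainty)
print(selected)
```

Because the filter runs on pixel maps rather than on descriptors, the same selection step could sit in front of any feature detector, which is the sense in which featureness is independent of feature type.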

Project Page : https://droneslab.github.io/PIXER/

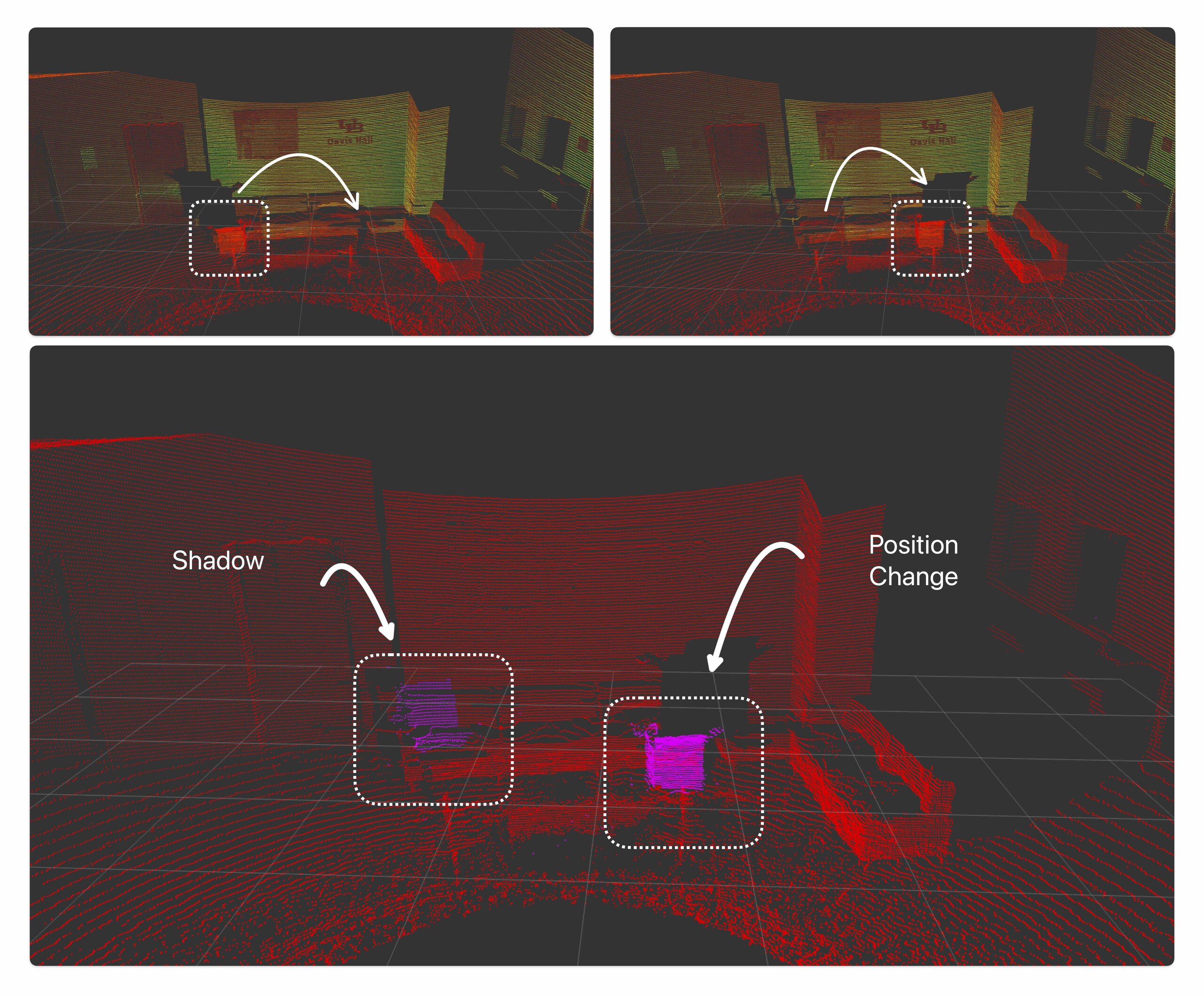

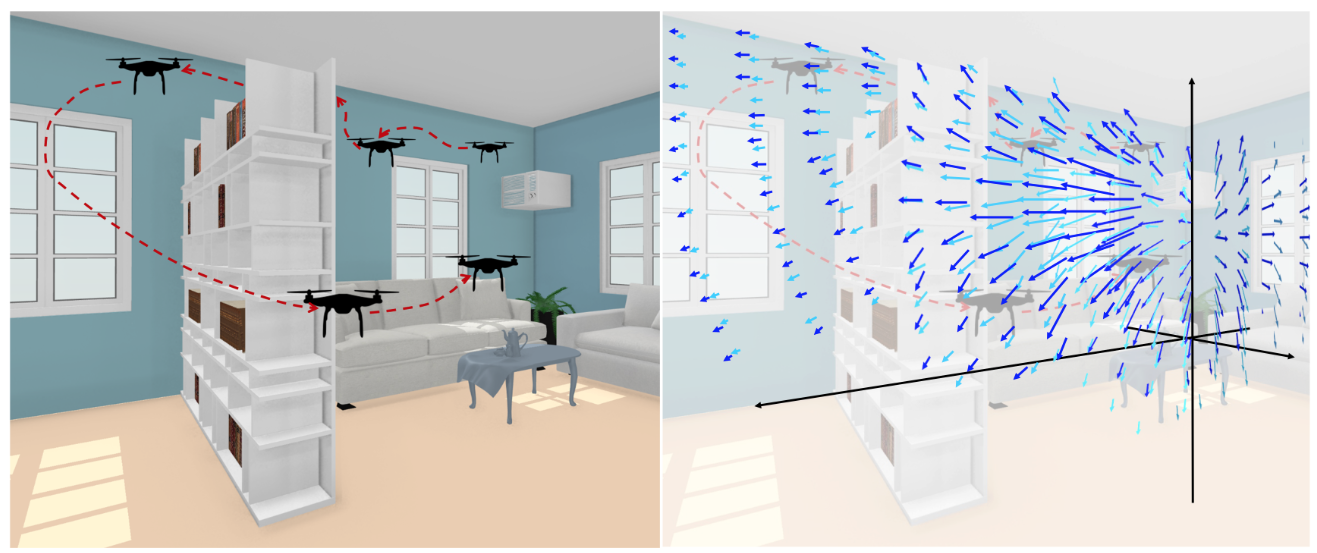

Active Mapping and Exploration

While passive mapping algorithms like SLAM produce high-quality maps overall, they are not optimized to generate the best possible dense maps of variables of interest. Results can be improved substantially in applications where some quantities matter more than others. We demonstrate the value of exploration for scanning objects of interest, such as chairs and tables in an indoor environment, and for monitoring physical quantities such as airflow. We adapt information-gain-based exploration to make it feasible to solve such problems in the real world and in real time.
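A simplified sketch of information-gain-based view selection follows. It scores a candidate view by the total Shannon entropy of the occupancy cells it would observe, optionally weighted by how much each cell matters. This is a common textbook heuristic, not the method used in this project; `view_gain`, the `interest` weights, and the toy grid are assumptions made for illustration.

```python
import numpy as np

def cell_entropy(p):
    """Shannon entropy (bits) of a Bernoulli occupancy probability."""
    p = np.clip(p, 1e-9, 1.0 - 1e-9)  # avoid log(0)
    return -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))

def view_gain(occupancy, visible_mask, interest=None):
    """Approximate gain of a view: summed entropy of the cells it sees.

    interest is an optional (H, W) weight map (hypothetical) that boosts
    cells belonging to objects or quantities of interest.
    """
    h = cell_entropy(occupancy)
    if interest is not None:
        h = h * interest
    return float(h[visible_mask].sum())

# Toy 4x4 grid: left half already well mapped, right half unknown (p = 0.5).
occupancy = np.full((4, 4), 0.5)
occupancy[:, :2] = 0.02

view_a = np.zeros((4, 4), dtype=bool)
view_a[:, :2] = True   # looks at the known region
view_b = np.zeros((4, 4), dtype=bool)
view_b[:, 2:] = True   # looks at the unknown region

gain_a = view_gain(occupancy, view_a)
gain_b = view_gain(occupancy, view_b)
best = "B" if gain_b > gain_a else "A"
print(f"gain A = {gain_a:.3f}, gain B = {gain_b:.3f}, choose view {best}")
```

Passing an `interest` map that is high on cells labeled as, say, chairs or tables would bias the robot toward scanning those objects instead of maximizing coverage uniformly.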

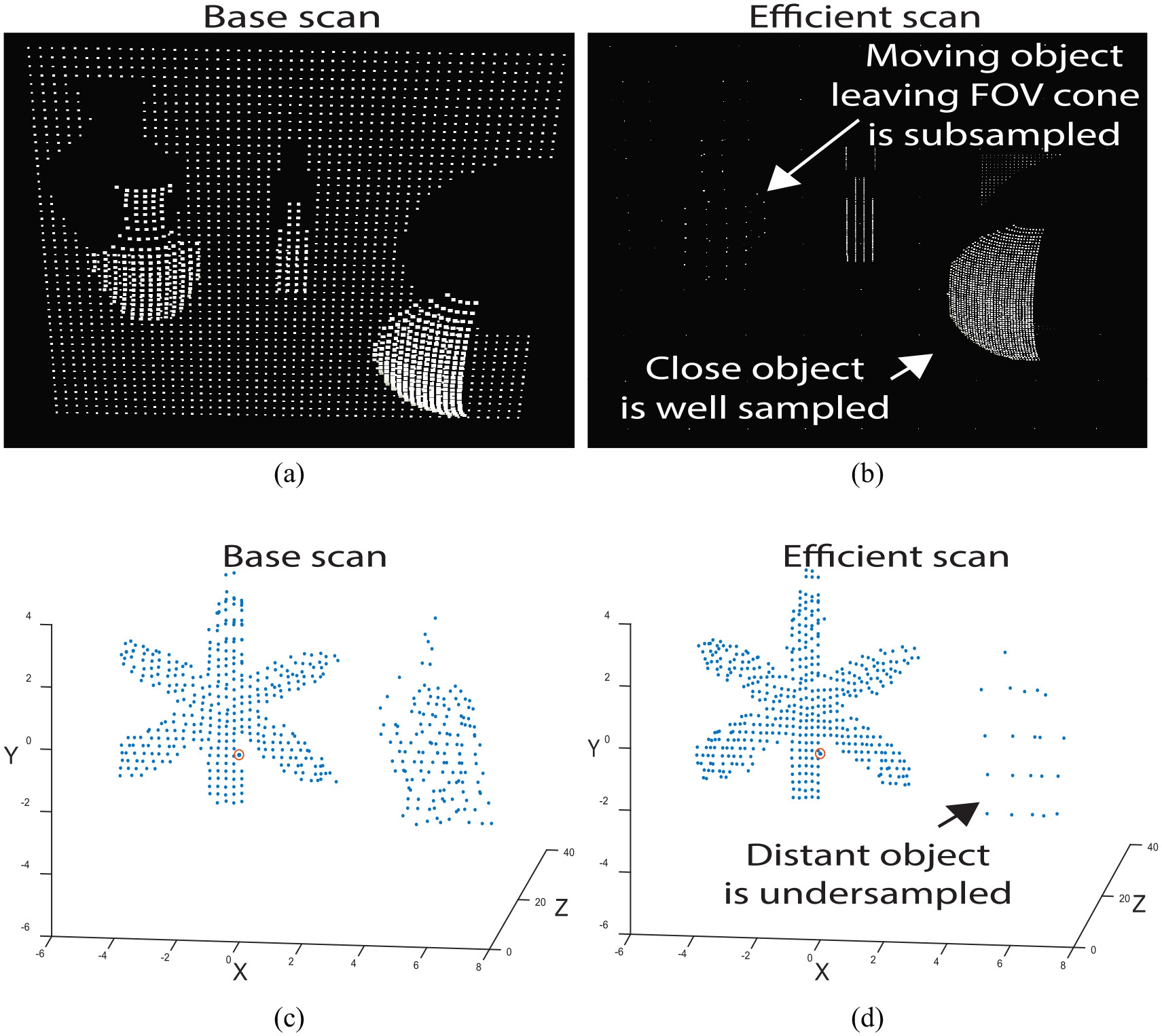

Novel Sensing Methods

Cameras and LiDARs have become the de facto visual sensing methods in robotics, and for good reason. However, novel sensing methods can enable more advanced applications. LiDARs can be built to trade off range for resolution in some areas and can be designed to make these trade-offs actively at runtime, enabling applications in mapping and exploration. Active cameras that are actuated independently of the robot's motion can be used to optimize the field of view (FOV) in novel ways. Multi-spectral and hyperspectral cameras, especially in the infrared regions, can be used to sense different materials, enabling better object classification and navigability analysis in unstructured environments.

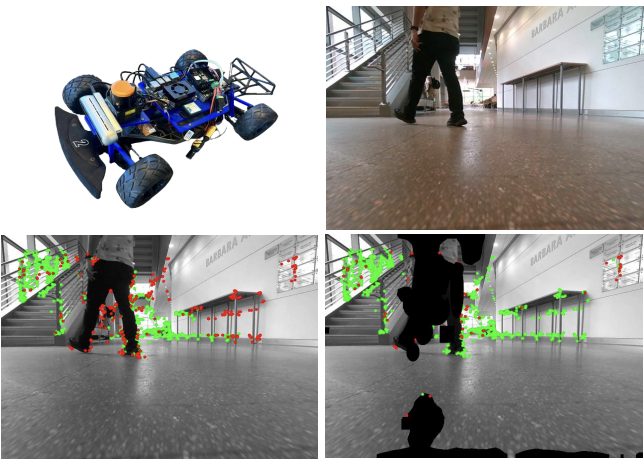

Robust Feature Learning Using Differential Parameters

Historically, feature-based approaches have been used extensively for camera-based robot perception tasks such as localization, mapping, and tracking. Several of these approaches also combine other sensors (inertial sensing, for example) to perform joint state estimation. Our group works on adapting mechanisms that identify the visual features that best correspond to robot motion as estimated by an external signal. Specifically, we use the robot’s transformations obtained from an external signal (inertial sensing, for example) and attend to the image regions that are most consistent with that signal. This approach enables us to incorporate information from the robot’s perspective instead of relying solely on image attributes. The resulting image space contains fewer outliers, which leads to more accurate trajectory estimation, lower reprojection error, and shorter execution time.

Autonomous Racing

Autonomous racing, particularly within the context of the F1Tenth competition, represents an exciting and innovative intersection of robotics, artificial intelligence, and motorsports. It serves as a rigorous testbed for research in autonomous vehicle technologies, offering a practical and competitive environment to push the boundaries of what’s possible. Our work focuses on the control and planning aspects. KFC proposes a novel differential-flatness-based trajectory tracking controller that outperforms the state of the art while requiring half the computational resources. SAGAF1T focuses on computing the raceline, i.e., the path that guarantees the best lap times on a given track. Together, these form a complete control and planning pipeline.